The next generation of autocorrect.

Autocorrect that reads your lips — typing frustration ends here.

Typing on small phone keyboards in portrait mode remains a major source of errors and frustration. LipConfirm fuses keystrokes with real-time lip cues (and, optionally, whisper-level audio) to make autocorrect meaningfully more accurate.

Silent, easy input

Combines subtle lip cues with normal typing to boost accuracy. No need to talk out loud. Many people already mouth words naturally while they type.

A new modality

Adds a missing signal that helps resolve ambiguous or messy typing that even today’s leading keyboards (Gboard, the iOS keyboard, SwiftKey, and Samsung Keyboard) still struggle to interpret.

Private by design

All processing runs on-device with a lightweight engine. Camera and mic stay off unless you opt in, and frames are discarded instantly.

Optimized for mobile

Portrait-mode phone keyboards produce the most typing errors. LipConfirm is designed to reduce them dramatically, with fewer suggestion pop-ups and a smoother typing flow.

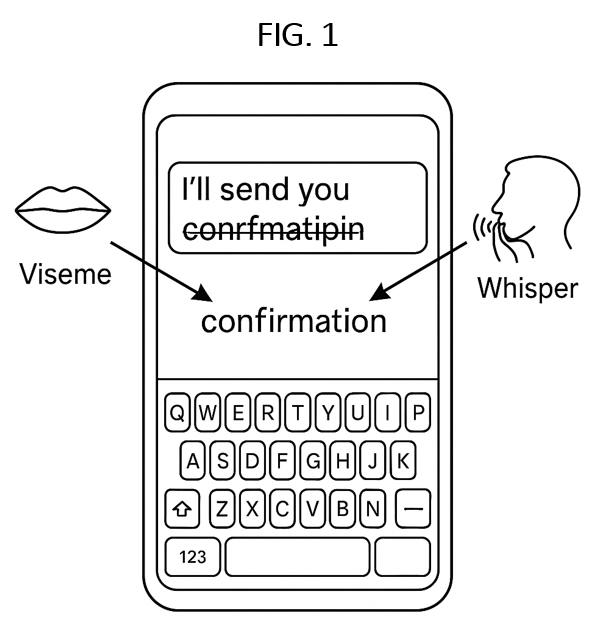

How it works

Capture

Keystrokes and lip cues are captured locally. Optionally, whisper-level audio can also be captured as an extra signal.

Neural fusion

Our on-device ML fusion model combines typed input with lip cues and optional whisper audio. Layered on top of an existing autocorrect engine, it can select the intended word even when that word does not appear on the default suggestion list. It also outputs a calibrated confidence score that determines when a word can be auto-committed, which reduces suggestion prompts.

Seamless correction

When confidence clears a threshold, the intended word replaces the garbled input without interrupting your flow. This often prevents the suggestion list from appearing at all.
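
The capture, fusion, and commit steps above can be illustrated with a minimal sketch. It assumes a generic, well-known technique (weighted log-linear "product of experts" late fusion followed by a confidence threshold), which is not necessarily LipConfirm’s patent-pending architecture. The function names `fuse` and `decide`, the per-modality log-probability inputs, the weights, the missing-score floor, the 0.85 threshold, and the example scores are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple, Union

# Hypothetical sketch: generic weighted log-linear late fusion, not LipConfirm's actual model.
# Each modality supplies a log-probability per candidate word.
Scores = Dict[str, float]

MISSING_SCORE = -20.0  # hypothetical log-prob for a candidate a modality did not score


def softmax(scores: Scores) -> Scores:
    """Normalize log-scores into probabilities (max-subtracted for numerical stability)."""
    top = max(scores.values())
    exps = {word: math.exp(s - top) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def fuse(
    key_logp: Scores,
    lip_logp: Optional[Scores] = None,
    whisper_logp: Optional[Scores] = None,
    w_key: float = 1.0,
    w_lip: float = 1.0,
    w_whisper: float = 0.5,
) -> Scores:
    """Combine per-modality log-probs over the keyboard's full candidate set.

    A modality passed as None (lips not visible, no whisper) is simply skipped.
    """
    fused = {}
    for word, score in key_logp.items():
        total = w_key * score
        if lip_logp is not None:
            total += w_lip * lip_logp.get(word, MISSING_SCORE)
        if whisper_logp is not None:
            total += w_whisper * whisper_logp.get(word, MISSING_SCORE)
        fused[word] = total
    return softmax(fused)


def decide(
    posterior: Scores, threshold: float = 0.85, bar_size: int = 3
) -> Tuple[str, Union[str, List[str]]]:
    """Auto-commit the top word if confident enough; otherwise fall back to a suggestion bar."""
    ranked = sorted(posterior, key=posterior.get, reverse=True)
    if posterior[ranked[0]] >= threshold:
        return ("commit", ranked[0])
    return ("suggest", ranked[:bar_size])


# Hypothetical per-candidate log-probs for one garbled word.
keys = {"tanks": -0.7, "thinks": -1.0, "thank": -1.2, "thanks": -1.6}
lips = {"thanks": -0.05, "thank": -3.5, "thinks": -4.0, "tanks": -4.0}

print(decide(fuse(keys)))        # keyboard only
print(decide(fuse(keys, lips)))  # keyboard + lip cues
```

With these made-up numbers, the keyboard alone gives its top guess only about 0.36 probability, and "thanks" does not make the three-slot bar. Adding lip scores lifts "thanks" to roughly 0.89, above the threshold, so it is committed without a suggestion bar. Passing `lip_logp=None` with a `whisper_logp` mirrors the low-light case, where only the whisper signal is available.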

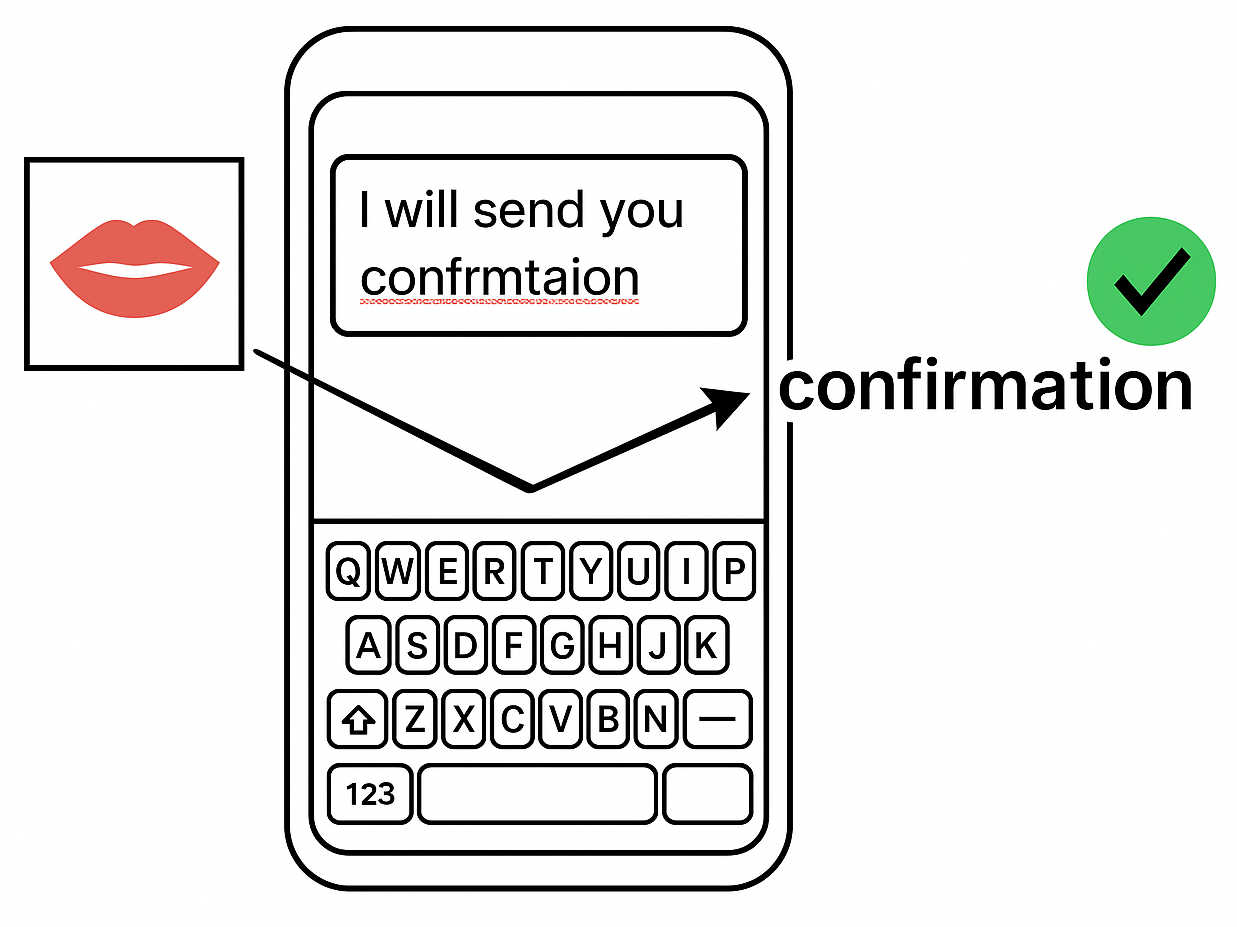

What the demo shows

- Messy input, clear intent. Fast, imperfect typing for which standard autocorrect fails to suggest the right word.

- Lip-guided selection. Silent mouth cues combine with keystrokes so the system selects the intended word—even if it wasn’t on the list.

- No talking required. Works from lip cues combined with typing; whisper support is optional for private contexts.

- Fewer interruptions. With strong lip evidence, the system commits the right word automatically—no suggestion bar needed.

- Note: The lip video panel is shown here as a behind-the-scenes visual aid. In normal use, processed lip cues are not displayed on screen.

For OEMs & Platforms

Retrofit ready

Integrates as a fusion layer on top of existing autocorrect engines—no full rewrite required.

Privacy aligned

All processing runs on-device. Frames are transient and never stored or sent.

Accuracy lift

Resolves messy input cases that frustrate users today, improving satisfaction across your ecosystem.

LipConfirm is available for integration across devices and platforms. Fewer suggestion prompts mean lower cognitive load and a smoother typing experience.

Patent & IP

Patent pending, with an extensive technical specification covering multiple detailed fusion architectures.

FAQ

Is this just voice dictation?

No. LipConfirm works silently from lip cues (visemes) fused with keystrokes, without requiring audible speech. Whisper input is optional, not required.

Does this require the internet or cloud processing?

No. All processing runs fully on-device in real time. No frames or audio are stored or transmitted.

Will this drain battery or slow down typing?

No. The fusion engine is lightweight and optimized for mobile hardware. It runs only while typing and consumes negligible power.

Is my camera always on?

No. The camera activates only during typing sessions, and only with user consent. Frames are analyzed instantly and discarded.

How is this different from existing autocorrect?

Traditional autocorrect only re-ranks candidates from a fixed suggestion list. LipConfirm draws on richer signals, fusing typed input with lip cues (and optional whisper audio) to more accurately select the intended word, even when it isn’t on the autocorrect list. It also reduces reliance on suggestion lists by auto-committing when confidence is high.

Do I need to perfectly mouth the words?

No. The system captures multiple cues from your lip movements, such as syllable counts and the mouth shapes of key sounds, to help disambiguate your intended word alongside your typed input. Mouthing words comes naturally and is easy to get used to, and it’s far faster and more convenient than backtracking and retyping on a mobile keyboard.
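
The idea of partial lip cues can be made concrete with a toy sketch that scores candidate words against two coarse observations: a syllable count, and whether the lips visibly closed (the bilabial mouth shape shared by p, b, and m). This is an illustrative assumption, not LipConfirm’s feature set. The spelling-based syllable estimate is deliberately crude, and the function names and penalty values are hypothetical.

```python
import re
from typing import Dict, List

# Hypothetical toy features; a real system would work from phonemes, not spelling.
BILABIAL_LETTERS = set("pbm")  # p, b and m share one visible lip-closure mouth shape


def estimate_syllables(word: str) -> int:
    """Crude estimate: count runs of vowel letters (silent 'e' and similar cases are not handled)."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def has_lip_closure(word: str) -> bool:
    """True if the spelling contains a letter usually pronounced with closed lips."""
    return any(ch in BILABIAL_LETTERS for ch in word.lower())


def lip_cue_scores(
    candidates: List[str],
    observed_syllables: int,
    observed_closure: bool,
    syllable_penalty: float = 2.0,
    closure_penalty: float = 1.5,
) -> Dict[str, float]:
    """Log-style score per candidate: 0.0 means full agreement; each mismatch subtracts a penalty."""
    scores = {}
    for word in candidates:
        score = 0.0
        score -= syllable_penalty * abs(estimate_syllables(word) - observed_syllables)
        if has_lip_closure(word) != observed_closure:
            score -= closure_penalty
        scores[word] = score
    return scores


# Hypothetical observation: one syllable, with a visible lip closure.
print(lip_cue_scores(["guy", "buy", "bay", "maybe"], observed_syllables=1, observed_closure=True))
```

Here the lip cues rule out "guy" and "maybe" but leave "buy" and "bay" tied, which illustrates why lip input on its own is too ambiguous and keystrokes remain essential. Scores of this kind could serve as the lip term that is combined with keyboard scores.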

Can I just use lip cues without typing?

No. LipConfirm is fundamentally a fusion architecture. Keystrokes are always required as the primary input signal. Lip cues (visemes) and optional whisper audio are auxiliary signals that dramatically improve recovery in messy typing cases, but on their own they produce too many ambiguities and errors to be practical. The unique value comes from our patent-pending fusion system, which efficiently combines these streams in real time to achieve accuracy that typing alone cannot.

Why is whisper audio part of this?

Whisper input is optional and exists for maximum flexibility across environments. In low light, lips may not be visible, but a quiet whisper can still be captured. In very noisy places, whispers may not work, but lip cues still do. Lip visemes remain the primary signal, and whisper audio adds an extra layer of robustness so LipConfirm works in more environments.

How is AI used here?

LipConfirm uses on-device ML models to fuse typed input with lip cues. Unlike traditional autocorrect, which only re-ranks words from a fixed list, our fusion model learns how visemes, syllables, keystrokes, and (optionally) whisper audio relate to one another, and uses them together to select the intended word directly, even when it isn’t on the autocorrect suggestion list.

Does the user need to train or calibrate the model?

No. LipConfirm is designed to work out-of-the-box without user training. The on-device ML models are pre-trained to generalize across users, capturing common viseme and typing patterns. Optional personalization can further refine accuracy, but calibration isn’t required.

How much accuracy improvement does LipConfirm provide?

Traditional autocorrect fails when messy typing leaves the intended word off its suggestion list, often forcing the user to backtrack and retype the whole word. By fusing typed input with lip cues (and, optionally, whisper audio), LipConfirm is designed to recover exactly those missed cases, the ones that cause the most frustration today. In modeling tests described in our patent application, fusion architectures consistently showed substantial lifts in word recovery over standard autocorrect, especially on challenging inputs.

Is LipConfirm only for mobile phones?

Mobile keyboards are the primary target because fast typing on small screens, especially in portrait mode, causes the most frustration today. But LipConfirm is designed as a general fusion layer that can work in any typing environment, including tablets, laptops, and even wearable devices.

Will I still see suggestion lists?

Less often. When the fusion signal is strong, LipConfirm auto-commits the intended word so you don’t have to pick from a list. If confidence is lower, the normal suggestion bar appears as a fallback, but enhanced with more accurate choices compared to keyboard input alone.

Is this patented?

Patent pending, with an extensive application detailing multiple technical models.

Contact

Email: hello@lipconfirm.com

LipConfirm was conceived and developed by independent inventor and engineer Andre Persidsky, an active creator with multiple ventures spanning software, hardware, and digital health.

LipConfirm
